More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This article presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored here is the use of the host system's GPU (Graphical Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Secondly, we will present how to implement the nvidia-docker tool. Finally, we will describe what tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are more efficient than CPUs (Central Processing Units). This is why the use of GPUs has grown in recent years. Indeed, GPUs are considered the heart of deep learning because of their massively parallel architecture.
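To make the link between deep learning and matrix multiplication concrete, here is a minimal pure-Python sketch of the forward pass of a dense layer. The function name `matmul` and the sample values are illustrative assumptions, not taken from any library. The point is that every output cell is an independent dot product, which is the kind of work a massively parallel architecture can spread across many cores.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    columns = list(zip(*b))  # columns of b
    # Each output cell only needs one row of a and one column of b,
    # so all n * m cells could be computed at the same time.
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


# Hypothetical data: 2 samples with 2 features, projected onto 3 units.
inputs = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]
outputs = matmul(inputs, weights)
print(outputs)
```

On a CPU these dot products run one after another (or a few at a time); a GPU library performs the same arithmetic on many cells simultaneously.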

However, GPUs cannot execute just any program. Indeed, they use a specific language (CUDA for NVIDIA) to take advantage of their architecture. So, how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran. There are wrappers for other languages including Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are based on these technologies.

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI functionality to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The goal is to set up an architecture that allows the development and deployment of deep learning models in services available via an API. Thus, the utilization rate of GPU resources is optimized by making them available to multiple application instances.

In addition, we benefit from the advantages of containerized environments:

- Isolation of instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of the same model under several versions.

- Consistent deployment of models.

- Model performance monitoring.

Natively, using a GPU in a container requires installing CUDA in the container and giving privileges to access the device. With this in mind, the nvidia-docker tool has been developed, allowing NVIDIA GPU devices to be exposed in containers in an isolated and secure manner.

At the time of writing this article, the latest version of nvidia-docker is v2. This version differs greatly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay to Docker. That is, to create the container you had to use nvidia-docker (Ex: `nvidia-docker run ...`), which performs the actions (among others the creation of volumes) that make the GPU devices visible in the container.

- Version 2: The deployment is simplified with the replacement of Docker volumes by the use of Docker runtimes. Indeed, to launch a container, it is now necessary to use the NVIDIA runtime via Docker (Ex: `docker run --runtime nvidia ...`).

Note that due to their different architecture, the two versions are not compatible. An application written for v1 must be rewritten for v2.
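The difference between the two invocation styles can be sketched with a small helper that builds the command line for each version. The helper name `gpu_run_command`, the image name `my-gpu-image` and the `nvidia-smi` argument are hypothetical placeholders chosen for illustration; only the `nvidia-docker run` and `docker run --runtime nvidia` prefixes come from the text above.

```python
import shlex


def gpu_run_command(image, args, version=2):
    """Build the argument list that starts a GPU-enabled container.

    version=1 uses the nvidia-docker wrapper, version=2 uses the NVIDIA
    runtime through the regular docker CLI.
    """
    if version == 1:
        base = ["nvidia-docker", "run"]
    elif version == 2:
        base = ["docker", "run", "--runtime", "nvidia"]
    else:
        raise ValueError("version must be 1 or 2")
    return base + [image] + list(args)


# "my-gpu-image" is a placeholder, not a real image name.
print(shlex.join(gpu_run_command("my-gpu-image", ["nvidia-smi"], version=1)))
print(shlex.join(gpu_run_command("my-gpu-image", ["nvidia-smi"], version=2)))
```

The resulting list can be passed to `subprocess.run` on a host where the corresponding version is installed.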

Setting up nvidia-docker

The required elements to use nvidia-docker are:

- A container runtime.

- An available GPU.

- The NVIDIA Container Toolkit (main part of nvidia-docker).

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official documentation gives the installation procedure for Docker.

NVIDIA driver

Drivers are required to use a GPU device. In the case of NVIDIA GPUs, the drivers corresponding to a given OS can be obtained from the NVIDIA driver download page, by filling in the information on the GPU model.

The installation of the drivers is done via the executable. For Linux, use the following commands, replacing the name of the downloaded file:

chmod +x NVIDIA-Linux-x86_64-470.94.run

./NVIDIA-Linux-x86_64-470.94.run

Reboot the host machine at the end of the installation so that the installed drivers are taken into account.
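After the reboot, one way to check that the driver is active is to query `nvidia-smi`, the utility shipped with the NVIDIA driver. The sketch below is only an illustration: the function name `list_nvidia_gpus` is an assumption, and the function simply returns an empty list when the utility is missing or fails.

```python
import shutil
import subprocess


def list_nvidia_gpus():
    """Return one 'name, driver_version' string per GPU, or [] if none is visible."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities are not installed or not on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []  # the utility exists but could not talk to the driver
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
print(gpus if gpus else "No NVIDIA GPU detected")
```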

Installing nvidia-docker

Nvidia-docker is available on the GitHub project page. To install it, follow the installation manual for your server and architecture.
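With v2, the NVIDIA runtime is made known to Docker through the daemon configuration (commonly `/etc/docker/daemon.json`), and the packages usually write this entry for you. As a hedged illustration of what that registration looks like, the sketch below merges a `nvidia` runtime entry into an existing configuration dictionary. The helper name `with_nvidia_runtime` and the sample `existing` settings are assumptions; check the installation manual for the exact file produced on your system.

```python
import json


def with_nvidia_runtime(daemon_config):
    """Return a copy of a Docker daemon config with the 'nvidia' runtime added."""
    merged = dict(daemon_config)
    runtimes = dict(merged.get("runtimes", {}))
    # Assumed entry: the runtime binary installed with nvidia-docker v2.
    runtimes["nvidia"] = {"path": "nvidia-container-runtime", "runtimeArgs": []}
    merged["runtimes"] = runtimes
    return merged


existing = {"log-driver": "json-file"}  # hypothetical existing settings
print(json.dumps(with_nvidia_runtime(existing), indent=4))
```

The helper does not modify its input, so it can be used to preview the merged configuration before writing anything to disk.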

We now have an infrastructure that allows us to have isolated environments giving access to GPU resources. To use GPU acceleration in applications, several tools have been developed by NVIDIA (non-exhaustive list):

- CUDA Toolkit: a set of tools for developing software/programs that can perform computations using the CPU, RAM, and GPU together. It can be used on x86, Arm and POWER platforms.

- [NVIDIA cuDNN](https://developer.nvidia.com/cudnn): a library of primitives to accelerate deep learning networks and optimize GPU performance for major frameworks such as Tensorflow and Keras.

- NVIDIA cuBLAS: a library of GPU accelerated linear algebra subroutines.

By using these tools in application code, AI and linear algebra tasks are accelerated. With the GPUs now visible, the application is able to send the data and operations to be processed on the GPU.

The CUDA Toolkit is the lowest level option. It offers the most control (memory and instructions) to build custom applications. Libraries provide an abstraction of CUDA functionality. They allow you to focus on the application development rather than the CUDA implementation.
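As an illustration of the library-level approach, the sketch below asks TensorFlow which GPUs it can see, without writing any CUDA code. It assumes a TensorFlow 2.x installation inside the container; the function name `visible_gpus` is hypothetical, and the function falls back to an empty list when TensorFlow is not installed.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can use, or [] if it is unavailable."""
    try:
        import tensorflow as tf  # assumed to be installed in the container
    except ImportError:
        return []
    # TensorFlow relies on CUDA and cuDNN under the hood to discover devices.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


devices = visible_gpus()
print(devices if devices else "No GPU visible to TensorFlow")
```

An empty result inside a container started with the NVIDIA runtime usually points to a driver, runtime or image mismatch rather than to the application code.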

Once all these elements are implemented, the architecture using the nvidia-docker service is ready to use.

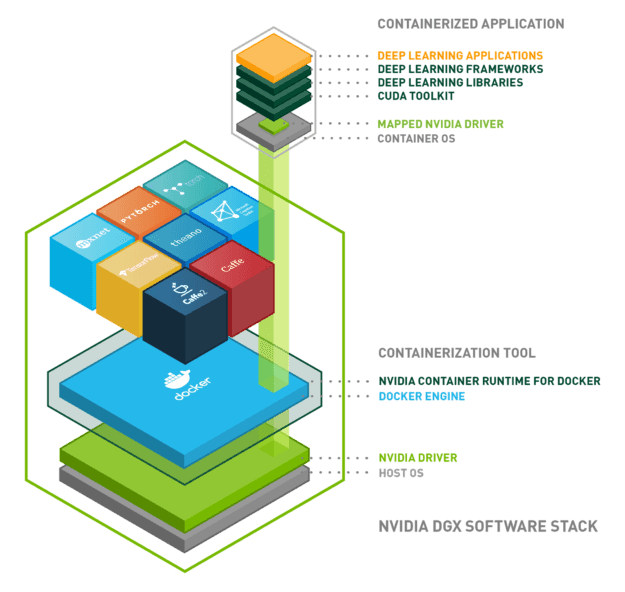

In this article is a diagram to summarize every little thing we have seen:

Conclusion

We have set up an architecture allowing the use of GPU resources from our applications in isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows …

- Docker: isolation of the environment using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications on Docker container:

  - CUDA

  - cuDNN

  - cuBLAS

  - Tensorflow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the goal of establishing itself as a leader. Other technologies may complement nvidia-docker or may be more suitable than nvidia-docker depending on the use case.
